We frequently make the mistake of thinking of AI as ‘just another technology’.

We arrive at the vague conclusion that AI will automate some jobs and augment others.

But there’s a larger, less-understood nuance to the potential of AI.

AI, particularly in the form of autonomous AI agents, is goal-seeking. Goal-seeking technologies are unique. They take over planning and resource-allocation capabilities and, in doing so, restructure how work is organized and executed.

Last Sunday, I wrote AI won’t eat your job, but it will eat your salary, explaining that even if workers retain their jobs, AI will reduce their ability to charge a skill premium for them.

In other words…

“AI won’t take your job, but it will take your ability to charge a premium for it.”

In today’s post, I talk about a second, less-understood impact of AI:

“AI won’t take your job, but it will take away the organizational power and mobility associated with it.”

Goal-seeking technologies affect the power relationships and mobility that help you move vertically and laterally within an organization.

In other words…

“AI won't eat your job, but it will eat your promotion.”

Through this analysis, we look at three key ideas:

How goal-seeking technologies are fundamentally different

How goal-seeking AI restructures goal-seeking organizations

How goal-seeking technologies impact power dynamics within organizations

Goal-seeking: The human advantage against tech

When thinking about the impact of AI, most analysis looks to previous technological shifts and tries to apply the same lens to AI.

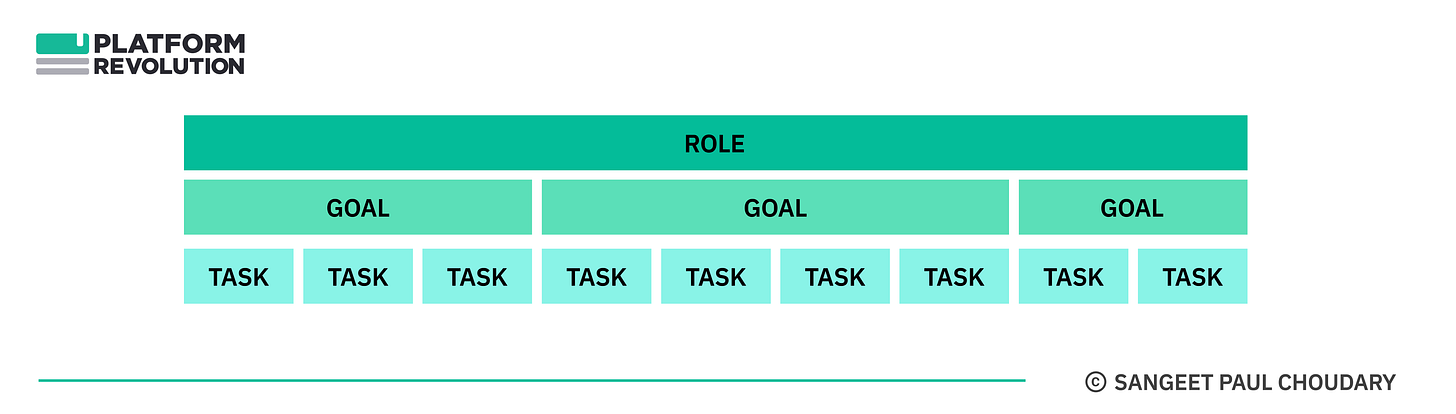

One way to think about the impact of new technologies on jobs is to think about jobs as bundles of tasks.

As I explain in Slow-burn AI:

Every job is a bundle of tasks.

Every new technology wave (including the ongoing rise of Gen AI) attacks this bundle.

New technology may substitute a specific task (automation) or complement it (augmentation).

Extend this analogy far enough, and you get this:

Once technology has substituted all tasks in a job bundle, it can effectively displace the job itself.

Of course, there are limits to this logic. It holds only for the small number of jobs that involve nothing but task execution.

But most jobs require a lot more than mere task execution.

They require ‘getting things done’: achieving objectives and accomplishing outcomes.

In other words, most jobs involve goal-seeking.

This is precisely why previous generations of technology haven’t fully substituted most jobs. They chip away at tasks in the job bundle without substituting the job entirely.

Humans retain their right to play because they can plan and sequence tasks to achieve goals.

In most previous instances, technology augmented humans far more often than it automated entire jobs away.

And that is because humans possess a unique advantage: goal-seeking.

AI Agents - Why this time is different

Autonomous AI agents are the first instance of goal-seeking, self-learning, path-finding, path-adjusting technologies.

LLMs, as we use them today, operate on a technology paradigm we are all familiar with: a tool that responds to human input by delivering an output.

Agents level it up several notches!

Let’s say you’re responsible for managing corporate travel.

An LLM can help generate a list of interesting destinations and an itinerary that meets certain constraints.

An AI agent can handle far more complexity: it can look up the top-rated hotel with rooms available during a specified period and within a specific budget, and then complete the reservation.

An autonomous AI agent can take this several steps further by learning about your context over time and finding and booking the hotel that best meets your travel preferences and constraints.

First, AI agents are goal-seeking:

As I explain in AI won’t eat your job, but it will eat your salary:

Agents are goal-seeking, and that’s what makes them different. While most technology aims at task substitution, agents go beyond tasks to seek goals.

Every agent operates with at least one goal (and possibly more than one).

In order to accomplish this goal, an agent must (1) scan the environment, (2) plan and deconstruct the goal into constituent tasks, and (3) act out the plan leveraging other agents and digital resources.
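To make the three steps concrete, here is a minimal Python sketch of a scan, plan, act loop for the travel example. It is illustrative only: the `Agent` class, the tool names (`check_calendar`, `find_hotel`, `book_hotel`) and the rule-based planner are hypothetical stand-ins. Real agent frameworks typically ask an LLM to produce the plan and call external services as tools.

```python
from dataclasses import dataclass, field
from typing import Callable

# A "tool" is anything the agent can call to get a task done. Here they are
# plain functions; in practice they might be other agents or external services.
Tool = Callable[[dict], str]


@dataclass
class Agent:
    goal: str
    tools: dict[str, Tool]
    log: list[str] = field(default_factory=list)

    def scan(self, environment: dict) -> dict:
        # (1) Scan the environment and keep only the facts relevant to the goal.
        relevant = ("city", "dates", "budget")
        return {k: v for k, v in environment.items() if k in relevant}

    def plan(self, context: dict) -> list[str]:
        # (2) Deconstruct the goal into an ordered list of tasks. This toy planner
        # is rule-based; an LLM-backed agent would generate the plan instead.
        steps = ["find_hotel", "book_hotel"]
        if "dates" in context:
            steps.insert(0, "check_calendar")
        return steps

    def act(self, steps: list[str], context: dict) -> list[str]:
        # (3) Act out the plan by calling the tool registered for each task.
        for step in steps:
            self.log.append(f"{step}: {self.tools[step](context)}")
        return self.log

    def run(self, environment: dict) -> list[str]:
        context = self.scan(environment)
        return self.act(self.plan(context), context)


# Hypothetical tools for the travel example.
tools = {
    "check_calendar": lambda c: f"free on {c['dates']}",
    "find_hotel": lambda c: f"hotel in {c['city']} under ${c['budget']}",
    "book_hotel": lambda c: "reservation confirmed",
}
agent = Agent(goal="book a hotel for my trip", tools=tools)
trace = agent.run({"city": "Singapore", "dates": "3-5 May", "budget": 200, "weather": "rain"})
print(trace)
```

Note that the irrelevant `weather` fact is dropped during the scan step, and the plan only includes a calendar check because dates were found in the environment.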

Second, autonomous AI agents learn continuously to get better at goal-seeking.

Learning involves both short-term, in-context learning as well as long-term cumulative memory. Much like rational human actors, agents can reflect on their actions and results and refine their path towards goal-seeking in response.
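A toy sketch of the two kinds of learning, assuming a hypothetical `LearningAgent` that chooses between hotels: notes from the current session stand in for short-term, in-context learning, a running score per option stands in for long-term cumulative memory, and `reflect` feeds outcomes back into that memory. Real agents implement memory and reflection very differently (for example, through LLM prompts and retrieval), so treat this only as an illustration of the feedback loop.

```python
from collections import defaultdict


class LearningAgent:
    def __init__(self) -> None:
        # Long-term cumulative memory: option -> running score from past outcomes.
        self.memory: defaultdict[str, float] = defaultdict(float)

    def choose(self, options: list[str], session_notes: list[str]) -> str:
        # Short-term, in-context learning: notes from the current session
        # (e.g. "avoid Hotel Y") rule options out before memory is consulted.
        allowed = [o for o in options if f"avoid {o}" not in session_notes]
        # Long-term memory: pick the option with the best track record
        # (ties go to the first option listed).
        return max(allowed, key=lambda o: self.memory[o])

    def reflect(self, option: str, outcome: float) -> None:
        # Reflection: fold the observed outcome back into long-term memory.
        self.memory[option] += outcome


agent = LearningAgent()
options = ["Hotel X", "Hotel Y"]
first = agent.choose(options, [])                    # Hotel X (no history yet)
agent.reflect("Hotel X", -1.0)                       # a bad stay
agent.reflect("Hotel Y", +1.0)                       # a good stay
second = agent.choose(options, [])                   # Hotel Y
third = agent.choose(options, ["avoid Hotel Y"])     # Hotel X
print(first, second, third)
```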

Finally, agents can call agents, paving the way for hierarchical organization of work.

Agents can call agents; it’s agents all the way down. A travel-planning agent can call a hotel booking agent and an activity planning agent and coordinate across the two (and more) to accomplish its overall goal.
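The "agents all the way down" structure is essentially a tree of delegation. A minimal sketch, assuming hypothetical agent names and leaving out the actual work each agent would do; it only records which agent pursues which goal and how deep it sits in the hierarchy.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """A goal-seeking agent that may delegate sub-goals to other agents."""
    name: str
    goal: str
    subagents: list["Agent"] = field(default_factory=list)

    def run(self, depth: int = 0) -> list[str]:
        # Each agent records its own goal, indented by its depth in the hierarchy...
        trace = ["  " * depth + f"{self.name}: {self.goal}"]
        # ...then calls its sub-agents and folds their results into its own.
        for sub in self.subagents:
            trace.extend(sub.run(depth + 1))
        return trace


hotel = Agent("hotel_agent", "book a hotel")
activities = Agent("activity_agent", "plan activities")
travel = Agent("travel_agent", "plan my trip", [hotel, activities])
print("\n".join(travel.run()))
```

Any sub-agent could itself have sub-agents, which is what allows work to be organized hierarchically.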

Now for the all-important caveat: agents are still fairly unsophisticated. They show high variability in outcomes and high error rates. If LLMs hallucinate, agents, which compound the capabilities of LLMs, also compound their hallucinations.

But the landscape of AI agents is evolving rapidly, and that’s why it’s important to understand how goal-seeking agents affect organizations. Early examples of agents include AutoGPT, AgentGPT, and SuperAGI. Further below, I’ve also included real-world examples of companies already building and deploying agents.

But first, let’s get into the impact of goal-seeking technologies on organizations.

Automation substitutes tasks, AI agents substitute goals

Goal-seeking technologies, though early, are evolving rapidly and present a massive step-change in what’s possible.

More specifically, that step-change is the ability of technology to substitute not just tasks but entire goals.

Every goal is a bundle of tasks, as the travel-planning example above illustrates. Goal-seeking technologies (in this case, autonomous AI agents) rebundle tasks and, in doing so, restructure how tasks are organized hierarchically.

In effect, goal-seeking technologies are uniquely positioned to rewire how organizations work.

Unbundling and rebundling organizations

How do AI agents rewire today’s organizations?

To understand that, let’s return to the foundational principles of unbundling and rebundling.

Every goal (e.g. plan my travel) is a bundle of tasks.

Technology unbundles this bundle and creates substitutes and complements for specific tasks.

Finally, a combination of human effort and technology rebundles these tasks towards the goal.

Goals, however, do not exist independently; they are part of a larger system, an organization of goals.

Organizational units of goal-seeking

Organizations are goal-seeking.

There are two units of execution and goal-seeking within an organization: The role and the team.

Individual work is accomplished at the level of a role. Teamwork is accomplished at the level of a team.

Goal-seeking at the level of roles and at the level of teams is recombined through projects and workflows (and incentives) into eventual goal-seeking at the organizational level.

Roles and Teams as bundles of goals

Let’s unpack this further!

A role is a bundle of goals.

Performing a role effectively over time means pursuing the goals allotted to that role in a way that aligns with overall organizational goals.

A team, likewise, is a bundle of goals.

Successful teams seek goals which ladder up to cumulatively help achieve organizational goals.

Unbundling and rebundling organizational units

AI agents are the first instance of technology directly attacking and substituting goals within a role or a team.

In doing so, they directly impact power dynamics within an organization, empowering some roles and weakening others, empowering some teams and weakening others.

Let’s look at the effects of AI agents on teams and roles:

1. Scope of the role

Let’s start by looking at individual roles within an organization.

Consider a role that pursues three goals, each made up of smaller underlying tasks.

As we’ve seen before, tasks get bundled into goals and multiple goals are bundled into a role.

When technology substitutes underlying tasks (red boxes below), the scope of the role remains largely unaffected as long as goal-seeking is critical to the performance of the role.

Let’s take the travel-planning example again. As new tools come in (travel booking tools, calendar management tools, payment tools, etc.), specific tasks get simplified and even substituted by technology, but the goals within which these tasks sit are still managed by humans.

AI agents are different.

AI agents attack the goal. If an agent effectively takes over a goal, that goal no longer needs to be performed by the worker and no longer needs to be bundled into the human-managed role.

Effectively, a goal-seeking AI agent can unbundle a goal from the role.

As a result, the scope of the role is reduced.
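The distinction between substituting a task and substituting a goal can be sketched as two operations on a role modeled as a bundle of goals, each of which is a bundle of tasks. The goals, tasks and function names below are hypothetical and chosen only to mirror the travel example.

```python
# A role is a bundle of goals; each goal is a bundle of tasks.
Role = dict[str, set[str]]

role_a: Role = {
    "Goal A": {"search hotels", "compare prices", "make booking"},
    "Goal B": {"negotiate rates", "report spend"},
    "Goal C": {"set travel policy"},
}


def automate_task(role: Role, task: str) -> Role:
    # Task substitution: the task drops out of its goal, but every goal (and so
    # the scope of the role) stays with the human, who still sequences the work.
    return {goal: tasks - {task} for goal, tasks in role.items()}


def agent_takes_goal(role: Role, goal: str) -> Role:
    # Goal substitution: an AI agent takes over the whole goal, so the goal is
    # unbundled from the role and the role's scope shrinks.
    return {g: tasks for g, tasks in role.items() if g != goal}


print(sorted(automate_task(role_a, "make booking")))  # ['Goal A', 'Goal B', 'Goal C']
print(sorted(agent_takes_goal(role_a, "Goal A")))     # ['Goal B', 'Goal C']
```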

This is the first, most obvious effect of goal-seeking technologies entering the organization of the future.

But wait, there’s more…

2. Scope of the team

What is a team?

You might say a team is a collection of individuals and every individual in the team has a role within the organization. Hence, the team is a bundle of roles.

But that’s not entirely true.

A team is a goal-seeking unit. It comes together to achieve a certain goal.

Every individual within the team performs one or more goals that ladder up to the team’s goal.

So, a team is not really a bundle of individuals or roles. It is a bundle of goals. Every member’s contribution to the team is limited to the goals that are relevant to the team.

As an example, consider an individual’s role and its relationship with the individual’s position in a team.

Let’s say an individual with Role A in the organization is responsible for achieving Goal A (greyed out boxes) as part of her role. Team 1 needs a similar capability to perform Goal 1 (greyed out boxes).

Hence, Role A performs Goal 1 in Team 1.

When goal-seeking technologies (AI agents) come in and effectively perform Goal A for Role A (greyed-out boxes in the illustration above), Role A’s position in Team 1 is challenged.

Goal 1 is unbundled from Role A by an AI agent. Role A no longer retains the right to deliver this goal into Team 1.

Team 1 can now effectively deploy an AI agent to perform Goal 1.

Hence, AI agents affect individual roles in two ways:

First, they reduce the scope of the role. Role A retains Goal B and Goal C and rebundles around that reduced scope; Goal A is unbundled from it.

Second, they can displace the role from a team entirely if the team can now achieve the same goal using an AI agent.

While Role A remains with the organization (i.e. the job isn’t displaced), its position in Team 1 has been effectively displaced.
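The same bundle view can sketch the team-level effect. In this toy model, a team maps each of its goals to whoever delivers it; `Goal 2`, `Role C` and the set of goals an agent can handle are hypothetical additions for illustration.

```python
# A team is a bundle of goals, each mapped to whoever currently delivers it.
team_1 = {"Goal 1": "Role A", "Goal 2": "Role C"}

# Goals an AI agent can now deliver well enough (hypothetical).
agent_capabilities = {"Goal 1"}


def reassign_to_agents(team: dict[str, str], capabilities: set[str]) -> dict[str, str]:
    # The team swaps in an AI agent for every goal the agent can deliver.
    return {goal: "AI agent" if goal in capabilities else owner for goal, owner in team.items()}


def human_members(team: dict[str, str]) -> set[str]:
    # Roles that still hold a position in the team.
    return {owner for owner in team.values() if owner != "AI agent"}


new_team_1 = reassign_to_agents(team_1, agent_capabilities)
print(sorted(human_members(team_1)))      # ['Role A', 'Role C']
print(sorted(human_members(new_team_1)))  # ['Role C']
```

Role A is not deleted anywhere in this model; it simply no longer appears among Team 1's members, which mirrors the point that the job survives while the team position does not.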

3. Rebundling of roles

Let’s look at the second-order effects of a reduction in the scope of a role.

Consider two roles in an organization: Role A and Role B.

Let’s assume agents impact these two roles to different extents:

The scope of Role B is reduced to a far greater extent. Eventually, it may not make sense for the organization to retain Role B at all.

What typically happens in such scenarios is a rebundling of roles. Once most goals have been unbundled from Role B, it no longer makes sense to retain it as a separate role. The few goals Role B still performs are unbundled and rebundled into another role in the organization (here, Role A, which is also seeing a reduction in scope).

Role B is eliminated not because its tasks were fully substituted by technology, nor because its goals were fully substituted by technology, but because the scope of the role no longer justified a separate role.

As AI agents increasingly unbundle goals away from organizational roles, role scopes are reduced and these smaller roles get increasingly rebundled.
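Rebundling can be sketched as a simple rule: a role whose remaining scope falls below some threshold is eliminated, and its leftover goals move into another role. The threshold of two goals, the choice of the largest surviving role as the host, and `Goal E` are all hypothetical assumptions, not a description of how any real organization decides.

```python
def rebundle(roles: dict[str, set[str]], min_goals: int = 2) -> dict[str, set[str]]:
    # Roles whose remaining scope falls below the (hypothetical) threshold no
    # longer justify a separate role...
    kept = {n: set(g) for n, g in roles.items() if len(g) >= min_goals}
    small = {n: g for n, g in roles.items() if len(g) < min_goals}
    if not kept:
        # No role is left to absorb the leftover goals; leave things unchanged.
        return {n: set(g) for n, g in roles.items()}
    # ...so their leftover goals are rebundled into the largest surviving role.
    host = max(kept, key=lambda n: len(kept[n]))
    for goals in small.values():
        kept[host] |= goals
    return kept


# After agents have unbundled most goals, Role B is left with a single goal.
roles = {"Role A": {"Goal B", "Goal C"}, "Role B": {"Goal E"}}
print(rebundle(roles))  # Role B disappears; Goal E is rebundled into Role A
```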

4. Reworking power structures

This is where the impact of AI agents becomes most telling.

There's a curious power relationship between Teams and Roles.

Teams have voting rights on the relevance of Roles.

A particular role in the organization may achieve different goals for different teams. During quarterly or annual reviews, these teams cast their votes in favour (or not) of that role based on its contributions to each team.

Let’s look at the earlier example again:

In this example, Role A’s position within Team 1 is displaced once an AI agent takes over Goal 1.

At the next quarterly or annual review, Role A has one fewer cheerleader supporting its contributions.

Now, imagine Role A also contributes Goal B to another team and Goal B also gets substituted by an AI agent.

The fewer teams speaking to a role’s contributions, the lower the negotiating power for that role within the organization.

AI agents and organizational power and mobility

Effectively, as more goals get taken over by AI agents across an organization, several effects start to play out:

The scope of a role progressively reduces as agents take over goals.

The number of teams which a role can contribute to and develop power relations with decreases.

Roles may get rebundled if their constituent goals are increasingly unbundled by AI agents.

A role that is displaced from multiple teams loses organizational power and mobility:

1. Organizational power is a function of the voting power of teams. Teams with outsized voting power can drive upward mobility for a role on the basis of its contribution. If the role’s contribution to such a team (one or more goals) is substituted by an AI agent, the role loses that team’s vote.

2. Lateral mobility (the ability to move horizontally) is a function of visibility and participation across diverse teams. If a role is increasingly moved out of teams because AI agents can perform those specific goals effectively, its lateral mobility gets adversely impacted.
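A back-of-the-envelope sketch of these two effects, assuming (hypothetically) that a role’s organizational power is the sum of the voting weights of the teams it still contributes to, and that its lateral mobility is simply the number of such teams. The team weights and the team-to-goal mapping are made up for illustration.

```python
# Hypothetical weights: how much say each team has at performance reviews.
team_votes = {"Team 1": 5, "Team 2": 2, "Team 3": 1}
# Which goal Role A contributes to each team.
contributions = {"Team 1": "Goal 1", "Team 2": "Goal B", "Team 3": "Goal C"}


def power_and_mobility(contributions: dict[str, str], team_votes: dict[str, int],
                       automated_goals: set[str]) -> tuple[int, int]:
    # Teams where the role's contribution has NOT been taken over by an agent.
    teams = [t for t, g in contributions.items() if g not in automated_goals]
    power = sum(team_votes[t] for t in teams)  # organizational power ~ voting power
    mobility = len(teams)                      # lateral mobility ~ team footprint
    return power, mobility


print(power_and_mobility(contributions, team_votes, set()))       # (8, 3)
print(power_and_mobility(contributions, team_votes, {"Goal 1"}))  # (3, 2)
```

Automating only the contribution to the high-weight Team 1 cuts power from 8 to 3, far more than automating the goal delivered to either low-weight team would (6 or 7), even though mobility drops by the same amount in each case.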

Essentially, AI agents cost a role its power and mobility not because the role itself can be entirely substituted, but merely because (1) the most important goals the role offers to (2) teams with outsized voting power get replaced.

And that is how AI lets you keep your job but undermines the power and mobility associated with your role.

We’ve seen this before, just not with tech

None of the four effects laid out above is entirely new. We’ve seen them before with offshoring, outsourcing, and on-demand gig work: goals that were previously performed with organizational resources could now be performed using external resources.

But these resources were always human. Goal-seeking remained a defensible human advantage.

And that’s what’s different this time around. Goal-seeking, for the first time, can be performed by technology.

And with that, the effects above get accelerated.

AI agents will learn much faster than an external freelancer or outsourcing partner would.

AI agents can also be embedded into organizations in ways that external human resources can’t. Constantly learning from conversations, documents, and other workflows, AI agents can achieve organizational fit more effectively over the course of their interactions with organizational resources.

The trade-off between cost and organizational fit that governs outsourcing work to external human agents may no longer apply to AI agents, which can progressively learn and adapt.

Roles unbundle, teams rebundle

Let’s take a break from the scenarios above and look at some anecdotal parallels.

At one of the companies I’ve advised over the past decade, the corporate development team was looking to hire a head of strategy to aid our expansion into the Chinese market. We ended up hiring a McKinsey partner, born in China and educated in the US, for that role. It sounded like a fancy role, and we needed someone fancy to come on board.

The role was a bundle of three goals:

Goal #1: Gain market insight

Goal #2: Develop go-to-market strategy for our global products entering China

Goal #3: Develop market channels relevant to these products

A few months in, someone pointed out that a junior sales manager we had brought on board was contributing a lot of valuable insight on the channels that would work best for our products.

Essentially, a junior sales manager drawing a fraction of the salary was delivering ‘good-enough’ results on Goal #1 and Goal #3.

Suddenly, the seven-figure US dollar salary didn’t make sense. The company realised that much of the value created by the strategy role came from Goal #1 and Goal #3, but the role into which these goals had been bundled was being priced on Goal #2.

Those three goals were then rebundled across (1) a two-member team comprising the in-country junior sales manager (Goals #1 and #3) and a corporate HQ strategy manager (Goal #2), with (2) some of the role’s strategic leadership also rolled into the China country manager’s role.

This unbundling and rebundling of roles and teams is constantly at play in organizations. Specific goals may be taken away from a role bundle when that role underperforms on them, and then rebundled into teams that bring together the best specialists.

Conversely, task forces (teams) set up to address a bundle of goals may soon realize that some specific goals may need a dedicated resource (role).

All this is to say that this cycle of unbundling and rebundling across roles and teams is inherent to the organization of work.

AI isn’t fundamentally changing goal-seeking and resource allocation. It is merely inserting itself into the organization and re-organization of work.

Economists are too focused on correlating technological advances with unemployment.

But they often miss the possibility that technological advances can preserve jobs while hollowing them out.

Reimagining the AI-led organization

We’re at the dawn of the rise of goal-seeking technologies.

Future AI-led organizations will organize themselves as fleets of AI agents. Hierarchies of agents will achieve complex goal-seeking to further organizational goals.

AI agents will need to interact with each other and such interaction will require the emergence of new protocols to streamline agent communication.

Agents will specialize by vertical. Here’s an example of an agent-led organization for education.

Marketplaces will emerge to organize a growing market of autonomous AI agents, allowing you to hire AI agents much like you hire freelancers on online work platforms like Upwork or Fiverr today.

Agent compliance and liability will emerge as a huge opportunity. When AI agents run amok, the few players that take on liability and compliance management stand to capture outsized gains.

The future is already here. Check out alphakit.ai or Relevance AI, for instance.

It’s just not evenly distributed.
