“Two ways. Gradually, then suddenly.”

― Ernest Hemingway, The Sun Also Rises

AI is everywhere, apparently! Except in the enterprise.

Enterprises remain uncertain about where to invest in AI and, crucially, where AI truly delivers competitive advantage.

This playbook is aimed at both the enterprise and the providers looking to serve it.

If you really want to succeed with AI adoption in the enterprise, you need to understand:

Micro: How AI actually unbundles work and creates new opportunities to rebundle it elsewhere

Context: The missteps that enterprises have made over the past decade in their quest for digital transformation

Macro: The overall forces in an enterprise ecosystem that determine where power, inertia, and decisions sit, and how they shift as new technology comes in

We start with the micro logic, layer in the enterprise context, and then expand on the macro opportunity.

This playbook is set up in eight parts:

The logic: The impact of Gen AI on the enterprise plays out through a cycle of unbundling of productive work away from human ‘service’ and rebundling of productive work into ‘software’.

The opportunity: This presents a unique opportunity, which I call Service-as-a-software - the ability to absorb and rebundle complex work into software.

The enterprise context: In 2024, enterprises no longer simply buy shiny innovation as they did in the ZIRP era, and that context needs to be understood. Performance matters, and this section covers what will get us to enterprise-grade performance with Gen AI.

Workflow capture: Based on the economics of Enterprise AI (as I explain below), I believe that winners will not win through task automation (most commonly associated with AI) but through workflow capture.

Business model advantage: Workflow capture presents a unique business model advantage, similar to the rise of IoT in industrial services - the ability to charge for performance, not for product. And that, I believe, will be a key adoption driver.

Threats and discontinuities: Improvements in AI are discontinuous. Things don’t work for a long time, and then they improve rapidly in the span of a few months. If you thought enterprise software was already crowded, hold on to your hat. This is a much wilder ride!

Moats, control points, and enterprise account expansion: The discontinuous nature of AI improvements will constantly enable new challengers to capture workflows away from existing players. How do you create a moat and expand the account in the midst of lateral attacks?

Winners and losers: Finally, what determines winners and losers in Enterprise AI?

This is long, but juicy.

Let’s dive in!

I’ve set this up as an ebook that you can download and read later if you want to.

Part 1 - The logic

Enterprise AI: Unbundling and rebundling work

Let’s start with some first principles thinking.

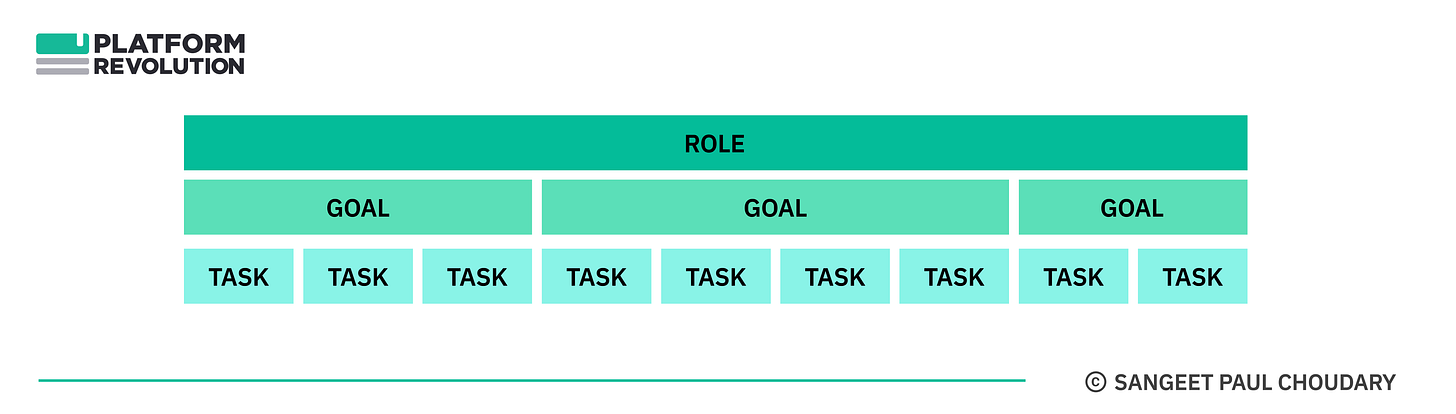

As I’ve explained before in How AI agents rewire the organization, work is a bundle of tasks, which are performed towards specific goals.

Tasks are the ‘atomic unit’ of any work done in the enterprise. Tasks may be performed by humans, as a service, or by software, in pursuit of a goal.

For instance, imagine a workflow where a procurement manager needs to execute performance-based payments across two software systems. Software system #1 measures work performance. Using this output, the manager reviews contracts and discusses them internally (all human tasks) before finally executing a payment through software system #2.

While most rote services have been automated by software, two important categories of work still retain the ‘human’ advantage:

The key decisions and actions that move the workflow along are owned by knowledge workers, who make decisions and act on various forms of information, leveraging their unique insight.

Goal-seeking and achievement of ‘workflow performance’ are owned by managers.

Previous waves of technology have chipped away at small tasks but never replaced entire workflows because critical decisions and managerial goal-seeking were always retained as ‘services’ performed by humans (whether inside or outside the organization).

Gen AI changes this by attacking exactly these two types of work that hold workflows together in enterprises - knowledge work and managerial work.

The Gen AI advantage - LLMs and agents

To understand the potential of Gen AI for substituting knowledge work and managerial work, let’s take the example of corporate travel booking.

Let’s say you’re responsible for managing corporate travel.

As new tools come in (travel booking tools, calendar management tools, etc.), specific tasks get simplified and substituted by technology, but the decisions and the goals binding these tasks together are still managed by humans.

However, this changes with Gen AI.

LLMs take over knowledge work and can take over many of the decisions involved. AI agents - the first instance of goal-seeking technologies - take over goal-seeking.

In the corporate travel booking example:

An LLM (Large language model) can help generate a list of relevant destinations and an itinerary that meets certain constraints. This is knowledge work.

An AI agent can look up top-rated hotels with available rooms, along with activity providers and restaurants that match the itinerary within a specific budget, and then make the necessary reservations. This is managerial work.

An autonomous AI agent can further learn about your corporate context and travel preferences over time, and find and book the hotels and activities that best meet those preferences and constraints. This is managerial work with learning advantages.

LLMs have the potential to absorb complex knowledge work into software.

Agents have the ability to absorb managerial work (with constant learning) into software.

In doing this, Gen AI has the unique ability to unbundle services from humans (organizational roles) and rebundle them into software.

You’ve heard of Software-as-a-service.

With Gen AI, we can now deliver Service-as-a-software.

Part 2 - The opportunity

The evidence and the hype

Enterprise AI, today, sports some evidence, and a lot of hype.

But directionally, one thing is becoming increasingly clear - work is getting unbundled from workers and rebundled into software.

On a recent earnings call, C3.AI gave the example of one of their customers - DLA Piper, a top law firm - moving tasks as knowledge-intensive as due diligence of contracts to AI and gaining an 80% reduction in effort.

Peer-reviewed research already suggests that a context-rich chatbot performs better than highly trained radiologists on some tasks, with a 90% reduction in time and a 99% reduction in cost.

Earlier this year, Klarna famously claimed that its chatbot was doing the work of 700 full-time agents, potentially driving $40M in profits.

And as some correlational support for the task-substitution argument above, the number of writing jobs posted on Upwork has declined by 33% since the arrival of ChatGPT, while translation and customer support jobs have declined by 19% and 16% respectively, according to an analysis of 5M jobs.

There’s some evidence - and a lot of hype - but directionally, this points to a world where we move to Service-as-a-software.

Service-as-a-Software

The adoption of Enterprise AI will see a shift from service-dominant workflows, where most tasks are performed by humans, to software-dominant workflows, where critical knowledge and managerial work are absorbed by AI.

At this point, the service can be delivered as software.

1. Service-Dominant Workflow: This shift starts with an initial stage where workflows mostly depend on human decision-making and manual actions, with software used for simpler tasks like data processing or automation.

2. Unbundling: As AI’s ability to perform specific tasks improves, these workflows are examined to find more efficient structures and reduce reliance on manual effort.

3. Componentizing (Service-as-a-Software): As AI is incorporated into the current workflow, specific tasks get componentized and become available entirely as software modules.

They can continue sitting in the same workflow,

or (and this is important),

plug-and-play into other workflows now that they are componentized as software and are likely just an API call away.

4. Rebundling: This componentization of tasks enables the enterprise to reimagine workflows and (more importantly) decision sequences. Workflow efficiency and organizational power structures determine whether the tasks are rebundled into the earlier workflows or whether entirely new ways to get the job done emerge.

5. Software-Dominant Workflow: Once the rebundling is complete, a software-dominant workflow emerges, which can now be performed more efficiently using software (with some services stitched in initially).

With every improvement in technology, we keep going back to Step 2 to re-evaluate which of the remaining tasks in the software-dominant workflow can be further absorbed into software.

Over time, most of the tasks are captured into software.

The service is now effectively delivered as software.
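The five-step cycle, including the return to Step 2 after each technology improvement, can be sketched as a toy model in Python. This is a minimal illustration under stated assumptions, not a method from the text: each task carries a hypothetical difficulty score, each improvement raises a hypothetical AI capability score, and a task is absorbed into software once capability reaches its difficulty. The task list loosely follows the procurement example from Part 1; the `approve payment` task and all scores are invented.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    difficulty: float         # hypothetical: AI capability needed to absorb this task
    performer: str = "human"  # "human" (service) or "software"


def reevaluate(workflow: list[Task], ai_capability: float) -> list[str]:
    """Step 2 revisited: move every remaining human task that AI can now handle into software."""
    absorbed = []
    for task in workflow:
        if task.performer == "human" and ai_capability >= task.difficulty:
            task.performer = "software"  # Step 3: the task is now a software component
            absorbed.append(task.name)
    return absorbed


def software_share(workflow: list[Task]) -> float:
    """Fraction of tasks delivered as software; 1.0 is a fully software-dominant workflow."""
    return sum(task.performer == "software" for task in workflow) / len(workflow)


# Hypothetical workflow loosely based on the procurement example; scores are invented.
workflow = [
    Task("measure work performance", 0.2, performer="software"),
    Task("review contracts", 0.5),
    Task("discuss internally", 0.7),
    Task("approve payment", 0.9),
    Task("execute payment", 0.1, performer="software"),
]

# Each successive technology improvement raises AI capability (values are assumptions).
for capability in (0.4, 0.6, 0.8, 1.0):
    newly_absorbed = reevaluate(workflow, capability)
    print(f"capability {capability}: absorbed {newly_absorbed}, "
          f"software share {software_share(workflow):.0%}")
```

Each pass through the loop stands in for a return to Step 2. The rebundling decisions in Step 4 (which workflow a componentized task ends up in) are deliberately left out of this sketch.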

What we need to believe in

There are two key considerations that determine to what extent we see this shift play out:

Which tasks get absorbed into AI and when?

How good is AI at performing these tasks?

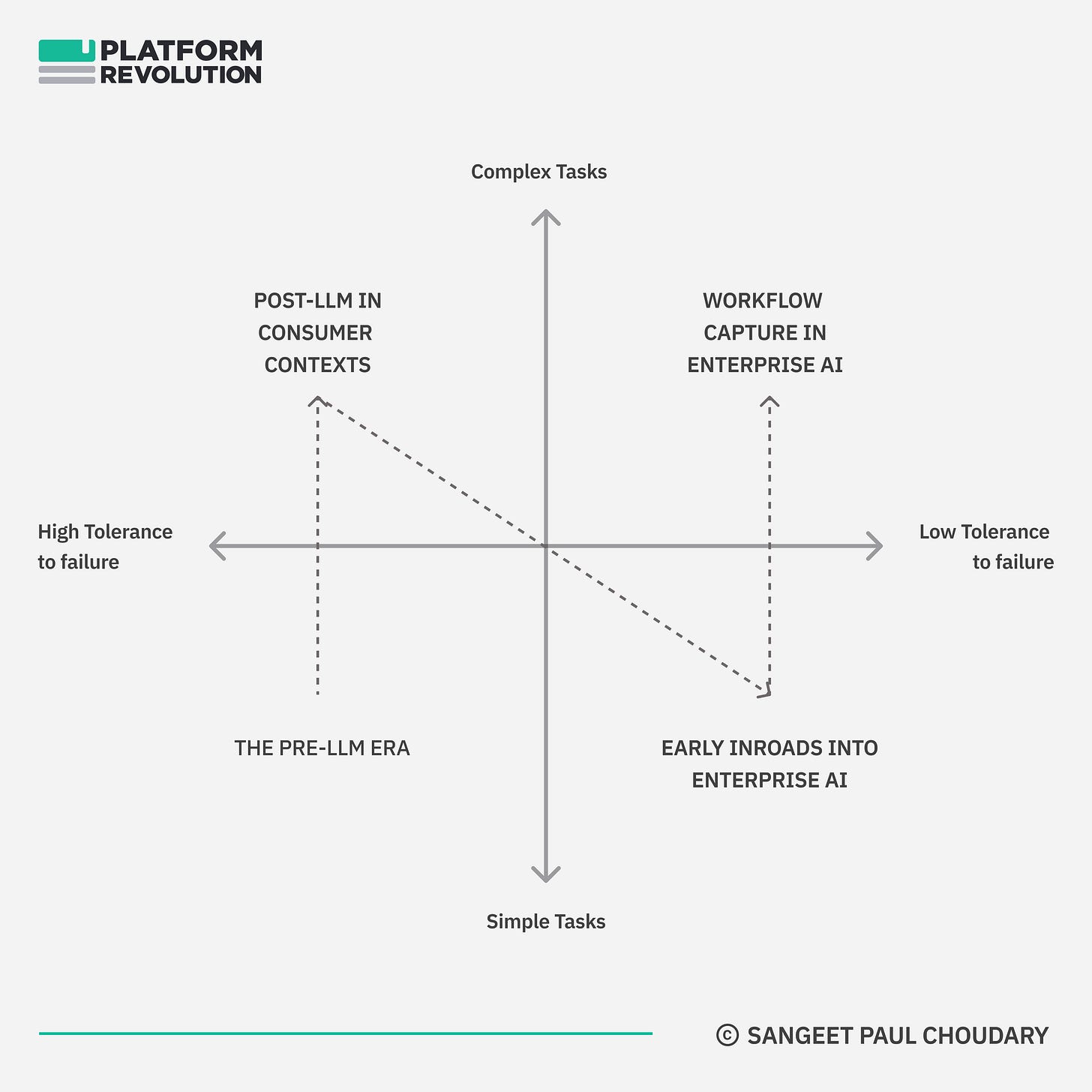

The answer to the first question requires a taxonomy of tasks. Ultimately, two things matter - the complexity of the task and the enterprise’s tolerance for task failure.

Think of early AI, playing bounded games, as where we started in the bottom-left quadrant. Post ChatGPT, we’ve seen rapid advancements moving us progressively upwards into the top-left quadrant.

But most of what’s happening today is still in the ‘shiny consumer toy’ category, addressing tasks with high tolerance to failure - think blog article outline generation vs. healthcare diagnosis.

To get to enterprise adoption, we need to take the evidence from the top-left quadrant and possibly solve simpler problems in the bottom-right, but with greater certainty and some service checks wrapped around it. And as AI continues to improve and standards are set for enterprise-grade performance, the frontier will gradually shift to the top-right.
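The two dimensions can be expressed as a simple classifier. Because the figure is not reproduced here, the axis orientation is an assumption inferred from the text: complexity increases upward, and tolerance to failure decreases from left to right, so consumer toys sit on the left and enterprise-grade tasks on the right. The scores and the 0.5 threshold are hypothetical.

```python
def quadrant(complexity: float, failure_tolerance: float, threshold: float = 0.5) -> str:
    """Place a task on the complexity x failure-tolerance grid (scores in [0, 1] are hypothetical)."""
    vertical = "top" if complexity >= threshold else "bottom"
    # Assumed orientation: left = high tolerance to failure, right = low tolerance (enterprise-grade).
    horizontal = "left" if failure_tolerance >= threshold else "right"
    return f"{vertical}-{horizontal}"


# Invented (complexity, failure_tolerance) scores for example tasks from the text.
tasks = {
    "play a bounded game": (0.2, 0.9),
    "outline a blog article": (0.6, 0.9),
    "resolve a routine support ticket": (0.3, 0.2),
    "diagnose a medical condition": (0.9, 0.05),
}

for name, (complexity, tolerance) in tasks.items():
    print(f"{name}: {quadrant(complexity, tolerance)}")
```

Under these assumed scores, the four example tasks land in the four quadrants the text describes, with healthcare diagnosis sitting in the top-right frontier.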

With that first question out of the way, let’s now turn to the more important second question “How good is AI at performing these tasks?”

But to answer that, let’s first look at some enterprise context.

Part 3 - The enterprise context

Selling promise vs selling performance

Here’s the problem with the hundreds (thousands?) of AI services firms flooding the market. They’re repeating the same mistake that the copycat design thinking firms made between 2017 and 2021.

They’re all selling promise, not performance.

The design thinking firms got away with it because they happened to coincide with peak ZIRP and the Covid-era ‘innovation-doesn’t-need-ROI’ mindset. This was an anomaly. ROI considerations have always dominated investment decisions. But the mind-numbing speed of change that the late 2010s threw us into, combined with the ZIRP-era cost of capital, let FOMO (fear of missing out) outbid ROI as a decision criterion.

Things are different in 2024 (and likely beyond). Executives are still smarting from the huge investments that yielded nothing beyond walls filled with post-its and shiny, little PoCs (proofs of concept).

AI adoption in the enterprise demands performance, not promise.

So where are we on the performance curve?

To understand that, let’s understand the underlying factors that determine LLM performance.

AI’s broadband moment and the simple economics of LLMs

Let’s move for a moment back to the late 1990s - when you’d wait patiently while your dial-up modem made a series of high-pitched noises - eventually allowing Yahoo Mail to slowly load up.

A few years later, by the early 2000s, broadband Internet was gaining wider adoption. Performance took off - pages loaded almost instantly, images appeared in a flash, and eventually, Netflix could stream entire movies without a glitch.

AI is going through a similar dial-up-to-broadband moment. And the speed at which this plays out over the next 2 years determines when and how AI adoption in the enterprise takes off.

To understand this, let’s look at some simple economics of LLMs. A common performance metric for LLMs is the speed of processing text, which is measured in "tokens per second." A token can be a word, part of a word, or even punctuation; it’s the basic unit of data (text) that an LLM processes. Imagine a sentence as a string of tokens that the LLM has to read, understand, and respond to.

In the current "dial-up" phase of AI, speeds might be 50 to 100 tokens per second. That’s enough to have basic conversations with a chatbot or ask simple questions, but things get really slow as tasks become more complex.

But we’re seeing early signs that the broadband era of AI isn’t far off. A couple of weeks back, Groq - a startup chip company - reported speeds of 800 tokens per second.
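A back-of-the-envelope calculation shows what these speeds mean for a single response. This sketch assumes constant throughput and ignores the time spent processing the input before the first token appears; the 2,000-token response length is a hypothetical stand-in for a long, multi-step answer.

```python
def generation_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a constant throughput (ignores time to the first token)."""
    return num_tokens / tokens_per_second


RESPONSE_TOKENS = 2_000  # hypothetical length of a long, multi-step answer

for tps in (50, 100, 800):  # speeds quoted in the text
    print(f"{tps:>4} tokens/s -> {generation_time_seconds(RESPONSE_TOKENS, tps):.1f} s")
```

At 50 tokens per second, the hypothetical 2,000-token response takes 40 seconds; at 800 tokens per second, it takes 2.5 seconds.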

What does this mean for AI? With the ability to process so many tokens so quickly, language models can handle complex tasks in real-time. They can analyze large amounts of text instantly, enabling more sophisticated output.

But this isn’t just about speed. In fact, speed isn’t even the most important part.

The shift from dial-up to broadband wasn’t just about faster loading times; it was about fundamentally different web pages, and fundamentally new use cases for what was possible on the internet.

Take software-as-a-service as an example again. There was no way companies would use mission-critical software hosted on the cloud in an era with low speeds. Improving performance fundamentally changed the scope of what was possible.

Improving LLM accuracy

For the internet to really transform off the back of the shift to broadband, a whole new ecosystem needed to improve - front-end technologies, databases, UX design patterns, and so on.

Improving tokens-per-second translates to performance improvements in speed and costs.

But what about LLM accuracy? Isn’t that the main bottleneck to enterprise adoption?

This is where the context window size comes in. The context window size refers to the maximum number of tokens (words, subwords, or characters) that a model can process at once or retain in memory when generating responses or analyzing text.

In other words, it is the length of text (and, hence, possibly the scope of ‘knowledge’) that an LLM can consider when making decisions or predictions.

The longer the context window, the more context (and ‘knowledge’) can be incorporated into LLM output.
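A minimal sketch of why window size matters, reusing the corporate travel example. It assumes whitespace splitting as a stand-in for a real tokenizer (which would split text into subword tokens) and a hypothetical policy that drops the oldest tokens first when the input overflows the window; `fit_to_context` is an illustrative helper, not a library API.

```python
def fit_to_context(text: str, context_window: int) -> tuple[list[str], list[str]]:
    """Split text into (tokens the model sees, tokens that fall outside the window).

    Assumptions: whitespace splitting stands in for a real subword tokenizer,
    and the oldest tokens are dropped first when the input is too long.
    """
    tokens = text.split()
    if len(tokens) <= context_window:
        return tokens, []
    overflow = len(tokens) - context_window
    return tokens[overflow:], tokens[:overflow]


# Hypothetical corporate travel context followed by the actual request.
policy = "Travel policy: economy class only. Hotels under 200 per night. Prefer aisle seats."
request = "Book a trip to Berlin next Tuesday."

visible, dropped = fit_to_context(policy + " " + request, context_window=10)
print("model sees:  ", " ".join(visible))
print("lost context:", " ".join(dropped))
```

With a 10-token window, the booking request survives but the economy-class rule and the hotel budget fall outside the window, so the model cannot take them into account. A larger window keeps the whole policy in view.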

Several simultaneous improvements currently underway will converge over the next 2-3 years to increase the size of the context window. These include:

Improving hardware - i.e. improving GPUs and TPUs (faster computation) as well as improving memory technology (more tokens stored in memory).

Improving model architecture - e.g. shift to sparse attention mechanisms (optimizes token processing to focus on the most relevant sections of text)

Improving algorithms - Better training techniques as well as using smaller models to complement larger models

Software improvements, and even more energy-efficient computations

All of these, together, converge to increase the context window size.

In summary, four things are happening simultaneously:

Cost of training base models is falling

The cost of inference itself is falling (falling inference costs per token)

Tokens per second are on the rise (possibly by 10-20x)

Context window size and utilization are improving

All these factors work together to improve both the cost and speed of LLMs, but most importantly, the accuracy of LLMs. The larger the context window size, the greater context it can process, enabling (assuming sufficient feedback and fine-tuning) greater accuracy.

Part 4 - Workflow capture

The economics of Enterprise AI

With LLMs, the cost of performing an individual task goes down (when compared with doing the same task with humans).

But with agents, a far more important shift in economics plays out.

Ronald Coase - in his seminal 1937 paper "The Nature of the Firm" - proposed that work is performed within organizations (firms) because it involves lower transaction costs compared to the open market. Transaction costs include search costs, bargaining costs, enforcement costs, and the costs of managing and coordinating a workforce.

All transaction costs above can be absorbed by a sophisticated AI agent. Whether a particular task is performed by your AI, or a third party AI, or some other resource, an agent can work across them to capture the workflow.

The real opportunity in Enterprise AI is not to deliver software but to deliver entire workflows.

Which workflows to capture and when

At this point, the skeptics among us would object: no, you cannot take over the entire workflow. There’s going to be a human-in-the-loop, so you are back to selling software. Or to becoming an outsourced services firm, which happens to use a bit of software.

Here’s where service-as-a-software is different.

If we believe that the four forces described above - falling training costs, falling inference costs, more tokens per second, larger context windows - will all continue over the coming years, we are going to see an increasing range of tasks moving from ‘human’ service to software.

1. Capturing workflows where you can

As an AI services firm, you need to look at a workflow and ask yourself:

Given our current AI suite, is this a workflow we can take in-house, and at what cost?

Given our current roadmap, how much do we expect our coverage of the workflow to grow, and its cost to fall, at each point in the roadmap?

2. Capturing workflows where others can

Capturing workflows where you can is only one determining factor.

You also need to fend off threats from lateral attacks and need to watch out for other players capturing adjacent workflows. In short, you need to have a strategy for ‘capturing workflows where others can’.

To figure out where to operate, ask yourself:

What other workflows for our client persona lie upstream or downstream from our target workflow?

If these workflows were taken over by another player, would they integrate into us or would we integrate into them? Who establishes a more powerful position with the client?

Getting the answer to the second question right is what matters the most.

We’ll dig into it in Part 6 below.

Workflow capture and the adoption advantage

Workflow capture has another advantage.

A major challenge for businesses adopting Software-as-a-Service was the learning curve of using self-serve software.

Enterprises, entrenched in legacy systems, want to avoid workflow disruptions and workforce retraining.

Service-as-a-Software mitigates these concerns.

Enterprise AI service providers can take over the entire workflow and sell the actual work, eliminating the onboarding concerns and issues entirely.

Quite interestingly, this also delivers a strong business model advantage to a Service-as-a-software company.


Part 5 - Business model advantage

The business model advantage of Enterprise AI

Now that we’ve established the logic of Service-as-a-software and distinguished promise from performance, let’s get to one of the most important advantages of this model.

Every shift in technology changes economics and offers an opportunity to rethink business models. This is going to be essential to winning with Enterprise AI.

Prior technology cycles have leveraged this to their advantage when going to market. Let’s look at a couple of examples.

Business model advantages in Software-as-a-service

Prior to software-as-a-service, software was sold through an upfront CapEx model. Software-as-a-service flipped this, offering software on a subscription basis, moving this from CapEx to OpEx. This was incredibly attractive for customers with cashflow issues.

Enterprise software relied on large, one-time sales and extensive account management. Software-as-a-service players focused on customer acquisition, usage tier expansion, and churn management. The two required fundamentally different organizations with vastly different incentive structures.

Much as product managers would like to believe that software-as-a-service models win because of superior product experience, the pricing and salesforce disadvantages above are the primary reason enterprise software vendors cannot effectively compete with them.

Business model advantages in IoT

A similar shift occurred with IoT, which allowed sensors to be embedded in hardware. Hardware OEMs relied on selling large-scale product contracts and locking in lucrative aftermarket services. For context, in 2001, General Motors generated more profit from $9 billion in aftermarket services than from $150 billion in car sales.

With IoT, a scrappy startup which offered to retrofit sensors to an industrial machinery setup (for free) could now offer monitoring and maintenance services, eating into the lucrative aftermarket segment. What’s more, it flipped the model from reactive interventions to subscription-based monitoring.

However, unlike software-as-a-service, the IoT disruption was temporary for hardware manufacturers. Sensing the potential, these incumbents themselves began proactively embedding sensors in their hardware, creating a new business model based on performance and uptime.

For instance, Rolls-Royce's aerospace division moved from selling jet engines to operating "jet engine-as-a-service", which bills customers based on actual flight usage - engine operating time, hours flown, the types of missions etc.

Business model advantages with Enterprise AI

With the rise of AI, we see a similar opportunity to innovate business models in the enterprise.

A lot of AI is currently sold like software-as-a-service - on usage-based subscription pricing models.

However, with service-as-a-software, there is a natural advantage to move towards a performance-based model, similar to what was seen with IoT and sensors in hardware.

Instead of selling software, this involves selling actual outcomes and performance of work.

This has important implications for pricing.

In the short term, pricing innovation will be determined by the nature of the service

Services with easily quantifiable outputs can more effectively leverage outcome-based pricing.

Lead generation is a good example. LLMs already manage most of the tasks involved in email outreach - prospect research, email creation, prospect prioritisation, follow-up etc. Selling the entire workflow on a cost-per-lead basis isn’t too far out. Similarly, customer support software can be priced on a cost per support ticket successfully resolved.
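The difference between selling software and selling outcomes shows up directly in how revenue is computed. In this sketch, the seat count, seat price, and per-lead price are invented for illustration; the point is only that per-lead revenue moves with the work delivered while subscription revenue does not.

```python
def subscription_revenue(seats: int, price_per_seat: float) -> float:
    """SaaS-style pricing: revenue depends on seats, not on results."""
    return seats * price_per_seat


def outcome_revenue(outcomes: int, price_per_outcome: float) -> float:
    """Outcome-based pricing: revenue depends on results delivered (leads, resolved tickets)."""
    return outcomes * price_per_outcome


# Hypothetical month for a lead-generation workflow; all figures are invented.
SEATS, PRICE_PER_SEAT = 10, 100.0
PRICE_PER_LEAD = 25.0

for qualified_leads in (20, 40, 80):
    print(
        f"{qualified_leads} leads: subscription={subscription_revenue(SEATS, PRICE_PER_SEAT):.0f}, "
        f"per-lead={outcome_revenue(qualified_leads, PRICE_PER_LEAD):.0f}"
    )
```

At 20 leads, the provider earns 500 under per-lead pricing against a flat 1,000 subscription; at 80 leads, it earns 2,000. The provider now carries the performance risk, which is why this model fits services whose outputs are easy to count.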

Initially, wherever pricing for output is feasible, we’re going to see a glut of entrants competing on price for the same outcome.

In the long-term, pricing innovation will be driven by bundling and cross-subsidization

The one thing we know from the history of technology commercialization is that short term point solution pricing is almost always priced out by bundling and cross-subsidization in the long term.

This is particularly relevant for service-as-a-software. Pricing per output for a point solution (a single outcome) may rapidly lead to a race to the bottom.

Long-term winners will combine multiple outputs with varying degrees of measurability into a single bundle. For instance, back-office functions like procurement and contracting may be difficult to price by output, but they can be priced more effectively as part of an end-to-end client management bundle that also includes front-office lead generation and customer support, with a single price for the whole bundle.

Bundling and cross-subsidization will win long term simply because the more quantifiable the value of a unit outcome, the more competitive and crowded that space gets, and the greater the opportunity to win it through cross-subsidization.

Layering on the enterprise context

Today’s AI startups are operating on a software-as-a-service hangover not just in pricing but also in sales.

While software-as-a-service worked on a try-before-you-buy model, selling service-as-a-software will be closer to selling actual services - the best example being the digital transformation services that enterprises have bought over the past decade.

To win, the playbook will be similar to the digital transformation service sales playbook:

Sell the ‘what’s the game and how do we win it’ story to the C-level and BU heads

Deliver a tightly-scoped proof of concept to demonstrate the outcome delivered

Use that initial footing to capture your first workflow. Then use that workflow to advance into adjacent workflows, all the while leveraging bundling advantages (more on this in Part 7 below).

A final note on commercial structures

Software-as-a-service has notoriously high operating leverage - there are high fixed costs but very low variable costs as more paying seats come on board.

Service-as-a-software will have higher variable costs because the cost of inference is going to be significant. Margins will be better than services (because compute is cheaper than wages) but worse than pure play software.
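The margin ordering in the paragraph above follows from where the variable cost of each unit of work sits. The figures below are hypothetical and chosen only to illustrate that ordering; real inference costs vary widely by model and task.

```python
def gross_margin(price: float, variable_cost: float) -> float:
    """Share of each unit of revenue left after the cost of delivering that unit."""
    return (price - variable_cost) / price


PRICE_PER_TASK = 10.0  # hypothetical price charged per unit of work

# Hypothetical variable cost of delivering one unit of work under each model.
variable_costs = {
    "services (human wages)": 7.0,
    "service-as-a-software (inference)": 2.0,
    "pure-play software (hosting)": 0.5,
}

for model, cost in variable_costs.items():
    print(f"{model}: {gross_margin(PRICE_PER_TASK, cost):.0%}")
```

With these assumed costs, the margins come out at 30%, 80%, and 95% respectively: service-as-a-software lands between services and pure-play software.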

On the other hand, because of the performance-based monetization involved, revenues will be less predictable than subscription revenue. In theory, however, this is where the learning advantages of AI come in handy. The more AI learns the customer context, the better it can deliver outcomes. Companies that master this learning flywheel will be able to offset lower margins with faster account expansion.

Part 6 - Challenges and Threats

Let’s look at two challenges to getting to enterprise-grade AI and one threat when you do get there.

Challenge #1: Being a victim of your own hype

In the near term, the service-as-a-software model could be its own worst enemy.

Back in the ancient pre-ChatGPT days of 2020, ScaleFactor - an AI player looking to create service-as-a-software to absorb accounting into AI - went bust after raising $100M. Forbes’s forensics had this to say about their ‘AI’:

Instead of producing financial statements, dozens of accountants did most of it manually from ScaleFactor’s Austin headquarters or from an outsourcing office in the Philippines, according to former employees. Some customers say they received books filled with errors, and were forced to rehire accountants, or clean up the mess themselves.

This isn’t as uncommon as you’d expect. Last month, Amazon pulled Just Walk Out from Amazon Fresh stores. A May 2023 paper reported that Amazon’s service-as-a-software was facing the same issue - it was still largely a service:

Amazon had more than 1,000 people in India working on Just Walk Out as of mid-2022 whose jobs included manually reviewing transactions and labeling images from videos to train Just Walk Out’s machine learning model.

As of mid-2022, Just Walk Out required about 700 human reviews per 1,000 sales, far above an internal target of reducing the number of reviews to between 20 and 50 per 1,000 sales.
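Putting the quoted figures in proportion makes the gap clearer. This only recomputes the numbers from the quote above; no other data is assumed.

```python
reviews_per_1000 = 700            # mid-2022 figure from the quote above
target_low, target_high = 20, 50  # internal target range from the quote above

print(f"share of sales reviewed: {reviews_per_1000 / 1000:.0%}")
print(f"reduction needed: {reviews_per_1000 / target_high:.0f}x to "
      f"{reviews_per_1000 / target_low:.0f}x")
```

In other words, about 70% of sales needed a human review, and hitting the internal target would have required cutting reviews by a factor of roughly 14 to 35.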

The biggest threat to service-as-a-software is that when you mix (1) hype, (2) reckless funding, and (3) disingenuous entrepreneurship, you get the perfect cocktail of an overfunded BPO (business process outsourcing).

With that, service-as-a-software is, well, just a service.

We’ve seen this pattern with consumer tech over the past decade. Cheap capital thrown at negative unit economics which don’t improve with scale (and in some cases, like MoviePass, get worse with scale). If services aren’t progressively absorbed into software, we end up with the same problem here.

Anyone playing in this space should ensure they don’t get swayed by meaningless metrics like Annualized Run Rate. Investors, and even customers, should ask to get a peek under the hood and look at the actual unit economics of delivering service-as-a-software and the roadmap for its improvement. Investors can avoid getting hoodwinked, and customers - who will need to invest significantly in workflow change - can avoid wasting their resources.

Challenge #2: Organizational disconnect

As I explained above, Software-as-a-service relied heavily on UX improvements because of the self-serve nature of its consumption. As a result, designers and engineers worked very closely to develop that edge.

This is already emerging as a second challenge in getting to Enterprise-grade performance.

As I explain in How to win at Gen AI:

The solutions that will truly differentiate through vertical advantage are the ones which will deeply couple model improvements into UX innovation.

This coupling sounds easy but can be very difficult to deliver.

The vast majority of really good model engineers and data scientists are horizontally oriented. They focus on building models that deliver on scale and scope. But this is orthogonal to developing deep empathy for a customer pain point, which is required to deliver model fine-tuning advantages into the UX.

In order to develop strong vertical advantage in Gen AI (and all forms of AI in general), you will need to deliver an org model that ensures rapid development cycles in teams comprising model engineers and UX designers.

Challenge #3: Lateral attacks

Performance improvements in Generative AI are happening in step changes. They are neither linear nor exponential, they are largely discontinuous.

We went from no ability to produce music to producing studio-quality music - with really good songwriting - with a one-line prompt (I’ve created entire playlists of driving music with Udio). We’re going to see similar sudden improvements in service-as-a-software as well.

What does this mean?

We’re headed for an interestingly poised competitive environment where the right to manage the workflow can constantly shift.

Player A may capture a workflow at a customer account. Meanwhile, a step-change performance improvement happens in tasks further upstream or downstream from this workflow. A part of the workflow which could not be absorbed into software suddenly gets absorbed into software. All at once, Player B gets into the same customer account by targeting the upstream or downstream workflow, and can eventually move into Player A’s workflow.

With service-as-a-software, the surface area of vulnerability is much higher.

And lateral attacks can emerge from any direction.

How do you defend yourself against such attacks?

As I explained in the Generative AI playbook:

You need to own the key control point that delivers primacy of user relationship.

A workflow comprises a vast range of decisions and actions. Yet, there are one or two core decisions and actions that empower the rest of the workflow.

To create a control point using AI, you need to ensure that your AI empowers the core decision and/or the core action in a workflow.

Part 7 - Competitive advantage and enterprise account expansion

Moats in Enterprise AI - Control points and rebundling

Let’s switch back to a slightly more macro lens.

We get that AI is poised to transform enterprise workflows through Service-as-a-Software. But how do you create a dominant position with this? Where do the most important and interesting moats lie?

Again, to make sense of Service-as-a-software, let’s look back at Software-as-a-service for a bit.

Once you succeed with Software-as-a-service, you can establish a control point by owning a key component of the workflow, which is now routed through the software.

Players that successfully establish a control point are well set up to expand their scope around this control point through API-driven integration. This is a key advantage over enterprise software that scaled through sales-led growth. Scope expansion was dependent on system integrators who partnered with enterprise software vendors and combined multiple workflows in custom implementations. These integrations were clunky, simply because the underlying software wasn’t built to be integrated.

This changed with cloud-hosted solutions. Cloud hosting enabled higher workflow interoperability.

This, in turn, enabled greater expansion of a solution's scope and centralized ownership of the control point.

Here’s an actual example of how such rebundling compounds adoption for Software-as-a-service, from Monday.com’s earnings report:

Note the green box, which is where the hockey stick takes off as Monday.com increasingly invests in partners, integrations, and eventually an app marketplace.

Essentially, ownership of control points over the workflow, around which complementary capabilities can be rebundled, enables expansion of scope.

This creates a horizontally dominant position in the value chain - the holy grail for all platform businesses.

Rebundling with AI agents as a competitive moat

Service-as-a-software adoption across the enterprise increasingly drives componentization of services. An unbundled service can now exist as an AI capability.

How do we now rebundle these capabilities into larger (and new) workflows?

Put very simply, managers in organisations perform the following roles in managing large workflows:

Bundling together different ‘components’ of work performed by different people

Focusing on the ultimate goal, leveraging all these ‘components’

In an enterprise, managers serve as the locus of rebundling.

As LLM adoption in the enterprise (Service-as-a-software) componentizes work, AI agents emerge as an important locus of rebundling.

AI assistants and agents are naturally suited to expanding horizontally across the value chain.

While AI builds advantage vertically through fine-tuning, the more of the workflow you capture, the more enterprise context you gain, and that context in turn enables further horizontal expansion.

But to capture more of the workflow, you need to ensure that the part of the workflow you already capture includes important decisions and actions that

(1) unlock activity into downstream workflows, and

(2) consume outputs from upstream workflows.

The starting point, in the bottom-left quadrant, is a situation where AI adoption in the enterprise is very low.

There are two ways in which AI adoption can increasingly spread across the enterprise and expand the account.

The hub-first path to account expansion

As more work moves from human services to AI, work gets increasingly componentized and opened out so that it can plug into different workflows, both internally and outside the firm.

The player which takes on the most critical components of the workflow - the most important decisions and actions - and moves them to AI is best positioned to establish a hub position into which the rest of the workflow connects. As a hub position emerges, an AI agent can gradually replace more managerial effort at the hub.

The agent-first path to account expansion

As AI agents get trained in the above manner, adoption of AI in new enterprises may even take an agent-first path.

In this case, AI is first leveraged to take on managerial work. This requires a sophisticated AI agent that is deeply trained in that particular vertical and workflow. Here, a hub position emerges because the agent takes over managerial effort, so the manager engages with workflows primarily through the agent.

Eventually, as all work happens through the agent, AI is adopted to take over other parts of the work, which are then componentized and made available as resources for the agent to use.

The end-state of AI adoption in the enterprise is one where a combination of AI agents and Service-as-a-software componentization transforms the way work is performed.

The bundling advantage flywheel

A successful service-as-a-software player will look to leverage agentic managerial capabilities and expand across multiple workflows in the enterprise.

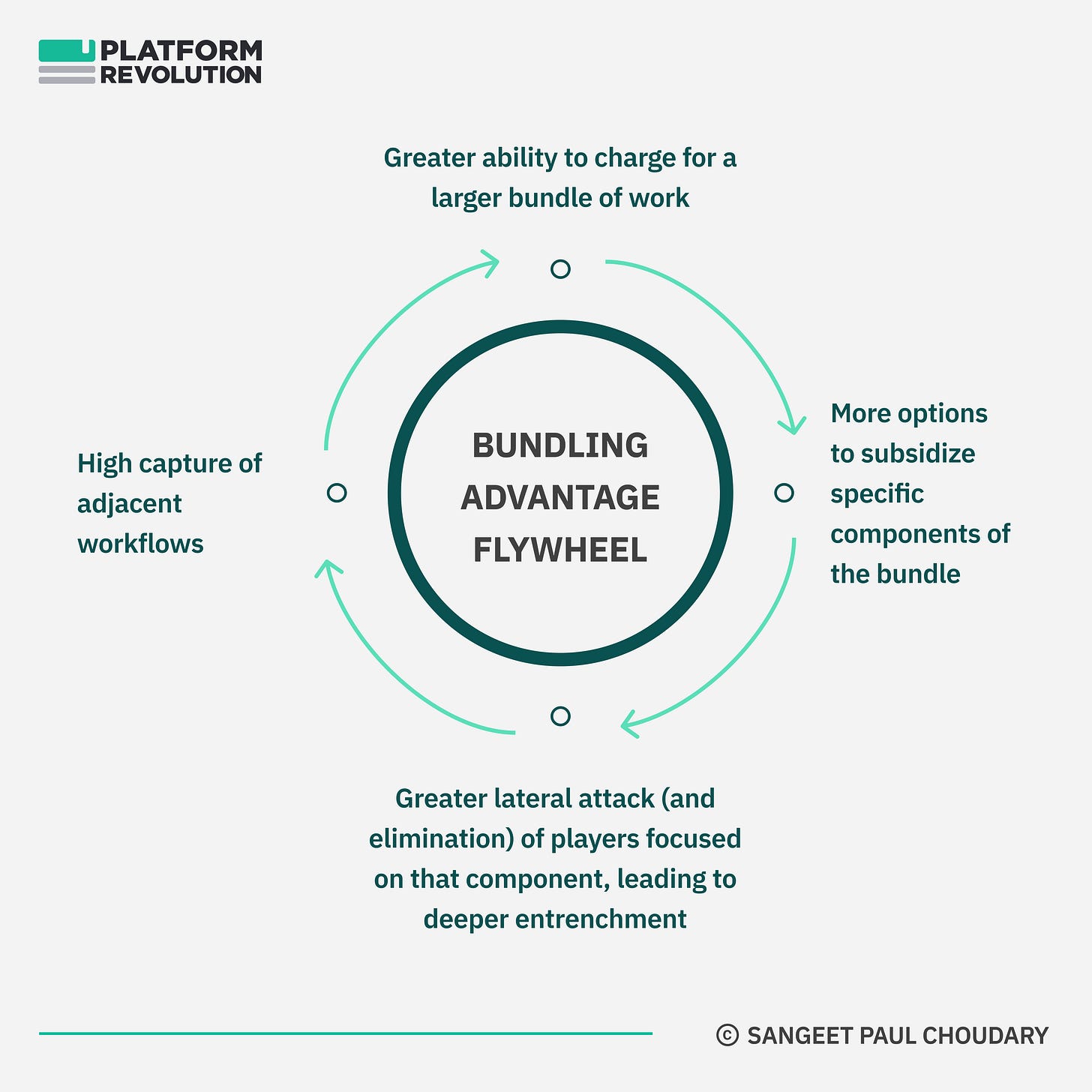

Greater workflow capture through rebundling enables deeper entrenchment with the customer. But it also creates another source of competitive advantage.

The greater the scope of bundling across multiple workflows, the larger the possibilities for cross-subsidization. This creates a natural advantage over providers of point solutions who charge for a single component of work. If you can subsidize one component of work (say, component A) and bundle it with another monetizable component B, thanks to your control over a larger workflow, you can effectively replace a player that is focused entirely on monetizing component A.

Part 8 - Winners and losers

Framing competitive advantage

This brings us back to my favourite question: What determines winners and losers with Enterprise AI?

To answer this question, and to close this playbook, I will pose two final questions and point to two foundational frameworks that I've laid out earlier.

Will incumbents (today’s software companies or services/consulting firms) co-opt the new model or will challengers successfully displace them?

To answer that, look around yourself and try to answer the two questions below:

1. Can challengers leverage their vertical positions to go horizontal, or will incumbents exploit their horizontal positions?

In How to win at Gen AI, I propose that winners in AI will eventually need to establish a horizontal position.

This is because, despite the data specialization needed to win vertically, AI is naturally well positioned to win horizontally. Both assistants and agents can rebundle across multiple use cases and expand horizontally.

If you don’t go horizontal, someone else will. And win.

So the approach to win in Gen AI is to go vertical, develop a control point, and then go horizontal, as shown below:

Have a look at the whole framework in detail:

2. Can incumbents bundle outcomes with existing profit pools?

In How to lose at Gen AI, I lay out a framing on unfair advantages in IoT which I’ve also explained above:

Most incumbents subsidized IoT services, bundling the connected services into their existing profit pools, where they charged for devices.

Clueless startups looking to capture value on connected services tried to subsidize their hardware and were left footing a huge manufacturing bill for low-quality hardware that took them nowhere.

Incumbents, instead, 'commoditized the complement' and increased the value of their devices by bundling in subsidized connected services - a huge business model advantage.

In whichever workflow you target, do incumbents have a bundling advantage with their existing product? Or can challengers successfully carve out new positions?

Have a look at the overall framework:

“I don’t think we’ve even seen the tip of the iceberg… It’s an alien life form… ”

- David Bowie, speaking about the internet, in 1999

Building in this space?

Opportunities are ripe for both challengers and incumbents. I believe the opportunity is particularly interesting for players that combine model improvements with business model innovation along the lines I've shared here. Most of the value in the Gen AI value chain will move to these two sources of advantage.

If you are working in this space and this playbook intrigues you, I’d love to connect and discuss further.
